AI long-term memory is the missing ingredient in modern intelligence systems. Your AI can solve differential equations and write Shakespearean sonnets—but ask it what you discussed yesterday and it draws a blank. That emptiness isn’t quirky behavior. It’s the direct result of how today’s large language models are architected.

The Core Problem: Why Today’s AI Still Forgets Everything

Large language models operate inside fixed context windows—128,000 tokens, maybe 200,000 if you’re lucky. Once a conversation grows beyond that boundary, old information simply falls off the edge. The model doesn’t “forget” in the human sense. It never stored anything at all.
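To make the "falls off the edge" behavior concrete, here is a minimal sketch of a fixed window. It is an illustration only: `fit_to_window` is a hypothetical helper, whitespace word counts stand in for a real tokenizer, and real products may truncate or summarize differently.

```python
from collections import deque

def fit_to_window(messages, max_tokens, count_tokens=lambda text: len(text.split())):
    """Keep the most recent messages whose combined token count fits the window."""
    kept = deque()
    used = 0
    # Walk backwards from the newest message; stop once the budget is exhausted.
    for message in reversed(messages):
        cost = count_tokens(message)
        if used + cost > max_tokens:
            break
        kept.appendleft(message)
        used += cost
    return list(kept)

history = [
    "My name is Dana and I prefer metric units",  # oldest
    "Please summarize the quarterly report",
    "Now rewrite the summary as bullet points",   # newest
]
# With a 12-"token" budget, only the two newest messages fit.
visible = fit_to_window(history, max_tokens=12)
```

The oldest message, the one carrying the user's name and preferences, is exactly the one that never reaches the model.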

Think of a brilliant consultant with acute amnesia. They can build a deck, rewrite your strategy, debug the entire codebase—but they can’t remember your name from Tuesday. Every new interaction is a reset.

This architectural constraint has consequences. Without persistent memory, AIs can’t maintain personalization. They can’t behave consistently or track long-term goals. They can’t build relationships, professional or otherwise.

It’s not surprising that memory became the center of gravity in 2024 and 2025. Both Claude and ChatGPT rolled out long-term memory as flagship features—not optional perks, but fundamental updates to how users work with AI.

Why Lack of Memory Prevents AI from Becoming True Agents

We toss around the word “agent,” but few systems today actually qualify.

Tools react. Agents remember, adapt, plan, and pursue goals over time.

An agent without memory is a contradiction in terms. It cannot:

- behave proactively

- make consistent decisions

- learn from interaction history

- maintain an evolving understanding of its user

- accumulate experience across sessions

Gartner’s research makes this explicit: memory management sits alongside planning, reasoning, and tool use as core infrastructure for autonomous AI. Without memory, everything else collapses into momentary performance without continuity.

Why AI Memory Has Become a Defining Competitive Advantage

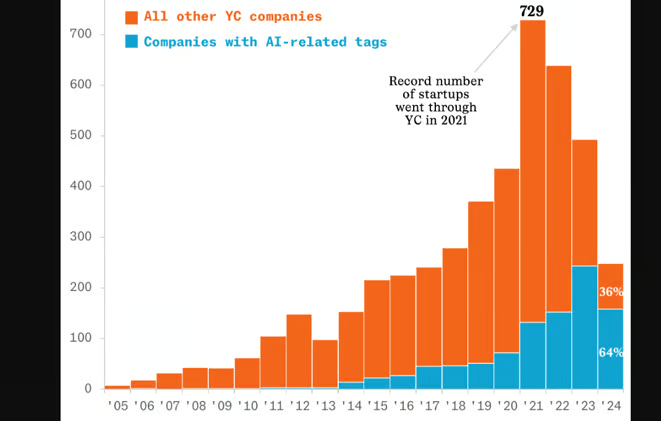

By late 2024, the industry’s stance had shifted. Memory stopped being a research curiosity and became table stakes.

Claude, ChatGPT, and the Long-Term Memory Turning Point

When Claude rolled out memory to all paid subscribers in October 2025—after ChatGPT’s earlier launch—it marked a clear inflection point. These systems no longer treated memory like a beta experiment. They elevated it to the center of the user experience.

Cross-session context. Remembered preferences. Accumulated understanding.

For anyone juggling complex projects, this shift turned AI from a disposable assistant into a persistent collaborator.

When multiple industry leaders converge on the same capability at the same moment, the signal is hard to ignore.

The Problem: Fragmented Memory Solutions

The industry agrees memory matters. It doesn’t agree on how to build it.

Existing approaches force tradeoffs that shouldn’t exist: speed vs. accuracy, precision vs. reliability, simplicity vs. capability.

Chatbot-centric memory systems fall apart in multi-user settings. Customer-support memory systems fail in personal assistant roles. Retrieval-augmented generation (RAG), still the backbone of many solutions, misses subtle relationships. Vector similarity ignores nuance. Keyword matching ignores meaning. Neither captures human-like memory: layered, interconnected, temporal.

Which brings us to the team that tackled the problem differently.

EverMind’s Breakthrough: What EverMemOS Brings to AI Memory

While the industry debated architecture, EverMind—born from Shanda Group—built an actual working system designed around how memory should function in agents.

What Is EverMemOS? A Long-Term Memory Operating System for AI

EverMemOS introduces a shift that seems obvious in hindsight: memory shouldn’t behave like a database. It should behave like a processor.

Most memory tools store past conversations and fetch them when needed. They act like archives—static, passive, descriptive.

EverMemOS treats memory as an active reasoning substrate. It doesn’t just pull relevant history into the prompt. It synthesizes, evaluates, and integrates memory directly into the agent’s cognitive loop.

The results show up in benchmarks:

- 92.3% on LoCoMo

- 82% on LongMemEval-S

Both scores are reported as significantly above the previous state of the art.

These aren’t cherry-picked numbers—they reflect real-world tasks: multi-session reasoning, temporal logic, information updates, and deciding when not to answer.

EverMemOS succeeds because it doesn’t merely retrieve. It understands how memories shape responses.

From Memory Database to Memory Processor

Here’s the difference in one sentence:

- Most systems: search → retrieve → append.

- EverMemOS: interpret → synthesize → integrate.

Tanka’s real-world deployment hit 92.3% on LoCoMo, the highest reported at rollout. The model feels less like a machine reciting logs and more like a colleague who genuinely recalls what happened and why it mattered.

Brain-Inspired AI Memory: The Temporal Structure Paradigm

EverMind’s architecture doesn’t imitate the brain cosmetically. It borrows the brain’s principles—particularly around time.

Spatial vs. Temporal Intelligence

Chen Tianqiao, founder of the Tianqiao and Chrissy Chen Institute, explains the distinction succinctly:

- LLMs operate in space — static snapshots, massive parameters, instantaneous computation.

- Brains operate in time — continuous integration of past, present, and future.

The modern LLM is a high-resolution camera. The human brain is a film. Intelligence unfolds only when information persists through time.

Human Memory as Blueprint, Not Metaphor

Evolution solved memory long before AI:

- Hippocampus → fast indexing and retrieval

- Cortex → long-term distributed storage

- Prefrontal cortex → planning, reasoning, executive control

EverMemOS mirrors these functions computationally. The result isn’t a brain replica—it’s a memory system that behaves more like a biological intelligence than a data pipeline.

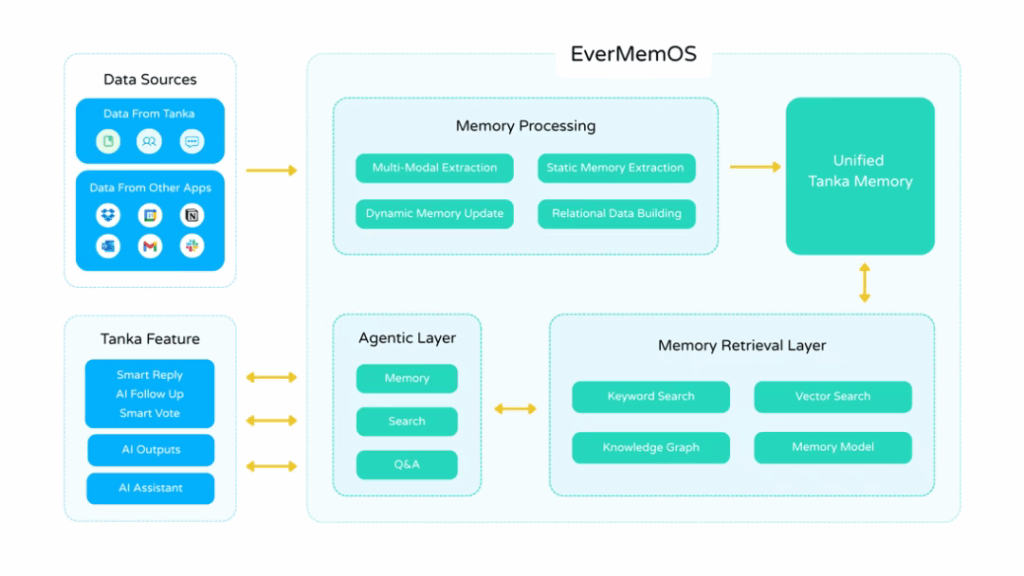

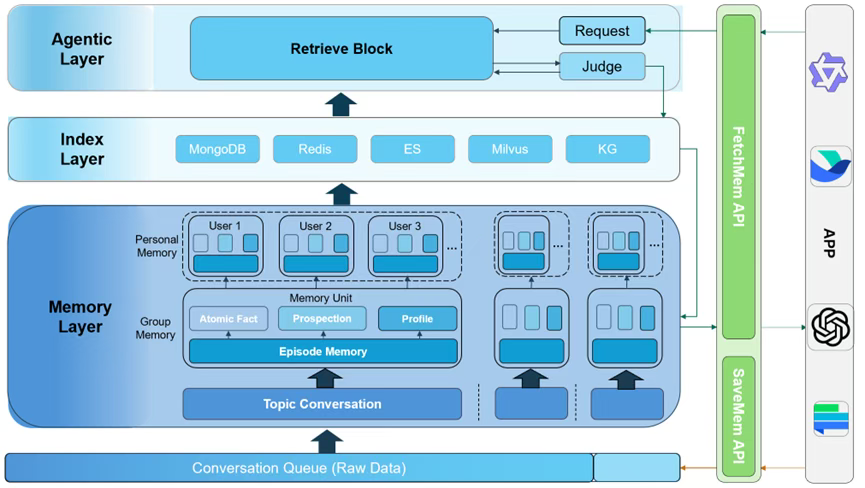

Inside EverMemOS: The Four-Layer Memory Architecture

EverMemOS organizes memory like a cognitive engine rather than a storage stack. Its four layers mirror the major functional regions of the brain.

Layer 1: Agent Layer (Planning & Reasoning)

The “prefrontal cortex.” It interprets tasks, plans responses, and decides which memories matter. It’s where meaning—and agency—emerge.

Layer 2: Memory Layer (Long-Term Structured Storage)

Like the cortex, it consolidates long-term knowledge: episodic memories, user profiles, preference graphs, semantic facts. Not every detail becomes memory. The system decides what’s worth storing.

Layer 3: Index Layer (Fast Retrieval Across Modalities)

Multiple retrieval strategies run in parallel: semantic vectors for meaning, keyword indexes for precision, knowledge graphs for relationships. This diversity gives the system human-like recall.
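A rough sketch of what running several retrieval strategies side by side can look like. Everything here is an assumption made for illustration: the `MEMORIES` and `GRAPH` data, the function names, and especially the "semantic" retriever, which uses plain word overlap as a stand-in for real embedding vectors. It is not EverMemOS code.

```python
from concurrent.futures import ThreadPoolExecutor

# Hypothetical memory store and relationship edges between memory ids.
MEMORIES = {
    "m1": "User prefers spicy Thai food",
    "m2": "User had dental surgery on Monday",
    "m3": "User's dentist is Dr. Lee",
}
GRAPH = {"m2": ["m3"], "m3": ["m2"]}

def keyword_search(query):
    """Return ids of memories sharing at least one exact word with the query."""
    words = set(query.lower().split())
    return [mid for mid, text in MEMORIES.items() if words & set(text.lower().split())]

def semantic_search(query):
    """Stand-in for vector search: rank by Jaccard word overlap, not embeddings."""
    q = set(query.lower().split())

    def score(mid):
        t = set(MEMORIES[mid].lower().split())
        return len(q & t) / len(q | t)

    ranked = sorted(MEMORIES, key=score, reverse=True)
    return [mid for mid in ranked if score(mid) > 0]

def graph_search(query):
    """Expand keyword hits to their neighbours in the relationship graph."""
    hits = keyword_search(query)
    return sorted({n for mid in hits for n in GRAPH.get(mid, [])})

def recall(query):
    """Run every strategy concurrently and collect results per strategy."""
    strategies = {"keyword": keyword_search, "semantic": semantic_search, "graph": graph_search}
    with ThreadPoolExecutor() as pool:
        futures = {name: pool.submit(fn, query) for name, fn in strategies.items()}
        return {name: future.result() for name, future in futures.items()}

results = recall("dental surgery")
```

Notice that only the graph strategy surfaces the dentist memory, which shares no words with the query. That is the point of mixing strategies: each one catches something the others miss.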

Layer 4: Interface Layer (APIs & Integration)

The sensory layer. APIs, enterprise connectors, and Model Context Protocol (MCP) support allow EverMemOS to slot into existing systems without ripping out infrastructure.

Together, these layers form a cognitive loop: perceive → index → recall → integrate → act.

Key Innovations That Set EverMemOS Apart

These aren’t incremental upgrades. They’re a rethinking of what AI memory should do.

1. Hierarchical Memory Extraction

Instead of flat text chunks, EverMemOS extracts episodic units, groups them by theme, understands cause-and-effect chains, and ranks importance.

Ask about a project, and it retrieves the entire context, not a keyword hit list.
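One way to picture the idea is as a small data structure. This is a sketch under stated assumptions, not the EverMemOS schema: the `Episode` fields, the importance scores, and both helper functions are hypothetical, and the extraction step that would produce these records from raw conversation is left out.

```python
from collections import defaultdict
from dataclasses import dataclass
from typing import Optional

@dataclass
class Episode:
    id: str
    theme: str
    summary: str
    importance: float                # 0.0 to 1.0, assumed to be assigned upstream
    caused_by: Optional[str] = None  # id of the episode that led to this one

def group_by_theme(episodes):
    """Group episodes by theme, most important first within each group."""
    groups = defaultdict(list)
    for ep in episodes:
        groups[ep.theme].append(ep)
    for eps in groups.values():
        eps.sort(key=lambda ep: ep.importance, reverse=True)
    return dict(groups)

def causal_chain(episodes, episode_id):
    """Follow caused_by links back to the root cause, returning oldest first."""
    by_id = {ep.id: ep for ep in episodes}
    chain, seen = [], set()
    current = by_id.get(episode_id)
    # The seen set guards against accidental cycles in the links.
    while current is not None and current.id not in seen:
        seen.add(current.id)
        chain.append(current.id)
        current = by_id.get(current.caused_by) if current.caused_by else None
    return list(reversed(chain))

episodes = [
    Episode("e1", "website-redesign", "Client rejected the first mockup", 0.6),
    Episode("e2", "website-redesign", "Deadline moved to June", 0.9, caused_by="e1"),
    Episode("e3", "hiring", "Interviewed two designers", 0.4),
    Episode("e4", "website-redesign", "Extra designer budget approved", 0.7, caused_by="e2"),
]
themes = group_by_theme(episodes)
chain = causal_chain(episodes, "e4")
```

Asking about the redesign then returns the whole themed group plus the chain of events behind the latest decision, instead of whichever chunk happened to match a keyword.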

2. Mixed Retrieval With RRF Fusion

Semantic search and keyword search, combined through Reciprocal Rank Fusion (RRF), deliver far higher relevance than either one alone.

Example: you ask “What should I eat?” shortly after dental surgery.

Semantic search recalls your food preferences. Keyword search recalls your surgery. RRF fuses them, producing context-aware recommendations.
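Reciprocal Rank Fusion itself is simple: every item earns 1 / (k + rank) from each ranked list it appears in, and the totals decide the final order. The sketch below applies it to the dental-surgery example. The memory ids and the two input rankings are made up for illustration, and k = 60 is the commonly used default, not a value the source attributes to EverMemOS.

```python
from collections import defaultdict

def reciprocal_rank_fusion(rankings, k=60):
    """Fuse several ranked lists: each item scores sum(1 / (k + rank)) over the lists."""
    scores = defaultdict(float)
    for ranking in rankings:
        for rank, item in enumerate(ranking, start=1):
            scores[item] += 1.0 / (k + rank)
    # Highest fused score first; ties are broken alphabetically for determinism.
    return sorted(scores, key=lambda item: (-scores[item], item))

# Hypothetical result lists for "What should I eat?"
semantic = ["likes_spicy_food", "favorite_is_ramen", "had_dental_surgery"]
keyword = ["had_dental_surgery", "dentist_said_soft_foods", "favorite_is_ramen"]

fused = reciprocal_rank_fusion([semantic, keyword])
```

Items that show up in both lists rise to the top, so the surgery memory and the ramen preference outrank anything found by only one retriever. Because RRF works on ranks rather than raw scores, the two retrievers never need comparable scoring scales.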

3. Agentic Smart Retrieval

When initial searches fall short, the system generates new queries—just like a human trying different mental angles to recall something. It fills information gaps rather than pretending they don’t exist.
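The retry loop can be sketched in a few lines. All names here are hypothetical, and the toy `search`, `reformulate`, and `is_sufficient` functions stand in for what would, in a real agent, presumably be an index lookup and model-generated queries.

```python
def agentic_retrieve(query, search, reformulate, is_sufficient, max_rounds=3):
    """Search, and if results fall short, try reformulated queries up to max_rounds."""
    collected, tried = [], []
    current = query
    for _ in range(max_rounds):
        tried.append(current)
        for item in search(current):
            if item not in collected:
                collected.append(item)
        if is_sufficient(collected):
            break
        current = reformulate(query, tried)
        if current is None:  # no new angle left to try
            break
    return collected, tried

# Toy stand-ins, for illustration only.
MEMORIES = [
    "Booked flight to Lisbon for May 3",
    "Hotel in Lisbon confirmed near Alfama",
    "Quarterly review moved to Friday",
]
ALTERNATIVES = {"trip plans": ["flight", "hotel"]}

def search(q):
    words = q.lower().split()
    return [m for m in MEMORIES if any(w in m.lower().split() for w in words)]

def reformulate(original, tried):
    for candidate in ALTERNATIVES.get(original, []):
        if candidate not in tried:
            return candidate
    return None

def is_sufficient(items):
    return len(items) >= 2

found, queries = agentic_retrieve("trip plans", search, reformulate, is_sufficient)
```

The first query matches nothing, so the loop tries two other angles before it has enough to answer. The `max_rounds` cap keeps a stubborn gap from turning into an endless search.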

4. Fast Mode for Real-Time Use Cases

Some tasks require depth; others require speed. EverMemOS adapts, skipping heavy processing when milliseconds matter.

Real-World Applications of AI Memory Systems

Memory unlocks use cases that were previously impossible.

Enterprise Applications

- CRM: not just transactions but preferences, tone, communication patterns.

- Knowledge management: capturing institutional memory that currently evaporates when employees leave.

- Decision support: surfacing historical parallels, lessons learned, prior outcomes.

Personal AI Agents

Memory turns assistants into companions:

- AI tutors that track learning gaps for months.

- AI coaches that understand habits.

- AI advisors that grow with you.

Multi-Agent and Multi-User Collaboration

Shared memory lets multiple AI agents coordinate like a team—even across departments or projects.

Open-Source Strategy and Developer Ecosystem

EverMind released EverMemOS as open source—a strategic move that echoes the trajectory of Linux, Kubernetes, and PostgreSQL.

Why It Matters

- Transparency builds trust.

- Community accelerates innovation.

- Widespread adoption can turn EverMemOS into the de facto memory standard.

Developer Tools and Frameworks

Complete evaluation suites—LoCoMo, LongMemEval, PersonaMem—let teams test real-world memory tasks without building custom infrastructure.

Cloud Roadmap

An enterprise cloud version will offer managed scaling, SLAs, and security—a hybrid model that mirrors successful open-core companies.

The Future of AI Memory: What Comes Next

Memory Becomes a Standard Infrastructure Layer

Within two to three years, AI without memory will feel as outdated as apps without internet access.

Continuous Identity and Long-Term Consistency

Memory creates stable personality traits in AI. Not artificial “quirks,” but consistent behavior built from accumulated experience.

Ethical and Philosophical Questions

As AI systems develop continuity and identity, questions emerge:

What does it mean for an AI to have years of memory?

Where do we draw boundaries around data ownership, privacy, and emotional dependence?

These aren’t hypothetical. Systems like EverMemOS already maintain coherent memories that span months.

Conclusion: Memory Unlocks the Path to Real AI Intelligence

Memory isn’t a feature. It’s the missing dimension that transforms AI from reactive tools into proactive partners.

Without memory, AI remains stuck in the eternal present—brilliant but shallow, capable but forgetful, powerful but inconsistent.

EverMemOS demonstrates that brain-inspired architecture can deliver practical, measurable benefits today. Its benchmarks validate the approach. Its open-source release opens the door for widespread adoption.

The future of AI runs through memory.

Systems that remember can understand.

Systems that understand can collaborate.

Systems that collaborate can evolve.

And that is where intelligence truly begins.
